Bidirectional LSTM Keras tutorial with example

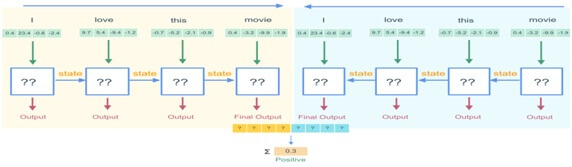

Bidirectional LSTMs train two LSTMs instead of one on the input sequence: the first on the input sequence as is, and the second on a reversed copy of it. This gives the network additional context and can result in faster and fuller learning on the problem. An LSTM processes its inputs one step at a time and passes information along through its hidden state. A unidirectional LSTM only preserves information from the past, because the only inputs it has seen are from the past.

A bidirectional LSTM runs over the inputs in two ways:

- One from past to future.

- One from future to past.

This is what distinguishes it from the unidirectional approach: the LSTM that runs backward preserves information from the future, and the two hidden states are combined so that every time step has context from both directions.

The approach duplicates the first recurrent layer in the network, so that there are two layers side by side.

To overcome the limitations of a regular RNN, the bidirectional recurrent neural network was proposed; it can be trained using input information from both the past and the future for a specific task.

The state of the RNN is split into a part responsible for the positive time direction and a part for the negative time direction.

A bidirectional LSTM contains two LSTM layers side by side.

One LSTM is the forward pass, and the other processes the sequence in the reverse order of the first.

The forward pass covers the positive time direction and the backward pass covers the negative time direction.

The outputs of the two LSTMs are merged using concatenation.

The architecture therefore consists of two LSTM layers, one for the forward pass and one running in reverse, followed by a concatenation of the outputs of the two layers.
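The forward pass, backward pass, and concatenation described above can be sketched in NumPy. This is only an illustration under simplifying assumptions: a plain tanh recurrent cell stands in for the LSTM cell, the weights are random, and the helper names `simple_rnn_pass` and `bidirectional_pass` are hypothetical, not part of Keras.

```python
import numpy as np

def simple_rnn_pass(x, W_in, W_rec):
    """Run a plain tanh RNN (a stand-in for an LSTM) over x of shape (time steps, features)."""
    h = np.zeros(W_rec.shape[0])
    outputs = []
    for x_t in x:
        h = np.tanh(W_in @ x_t + W_rec @ h)
        outputs.append(h)
    return np.stack(outputs)

def bidirectional_pass(x, fwd_weights, bwd_weights):
    # Forward layer reads the sequence as is.
    forward = simple_rnn_pass(x, *fwd_weights)
    # Backward layer reads a reversed copy; its outputs are flipped back
    # so that row t of both results refers to the same time step.
    backward = simple_rnn_pass(x[::-1], *bwd_weights)[::-1]
    # Merge by concatenation along the feature axis.
    return np.concatenate([forward, backward], axis=-1)

rng = np.random.default_rng(0)
n_timesteps, n_features, n_units = 10, 1, 20
fwd = (rng.normal(size=(n_units, n_features)), rng.normal(size=(n_units, n_units)))
bwd = (rng.normal(size=(n_units, n_features)), rng.normal(size=(n_units, n_units)))
x = rng.random((n_timesteps, n_features))

out = bidirectional_pass(x, fwd, bwd)
print(out.shape)  # (10, 40): 20 forward units + 20 backward units per time step
```

Each row of `out` holds the forward state (which has seen the past) next to the backward state (which has seen the future) for the same time step.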

Two LSTM passes help in applications such as network intrusion detection, due to the dynamic nature of the network.

A continuous flow of data is difficult to classify with a single pass of an LSTM network.

A single pass can increase the false alarm rate, whereas two passes can reduce it by picking up patterns that previously went unnoticed.

Accurate classification is desirable here because even minimal misclassification can leave the network vulnerable.

When the model also includes a convolution layer, its window size and stride affect classification performance.

The BiLSTM has a greater effect on accuracy than the convolution layer.

Bidirectional LSTMs focus on the problem of getting the most out of the input sequence by stepping through the input time steps in both the forward and backward directions.

Bidirectional LSTM in Keras:-

Keras supports bidirectional LSTMs through the Bidirectional layer wrapper.

This wrapper takes a recurrent layer as an argument.

It also lets you specify the merge mode, that is, how the forward and backward outputs are combined before being passed on to the next layer.

The options are:

- 'sum': the outputs are added together.

- 'mul': the outputs are multiplied together.

- 'concat': the outputs are concatenated together, providing double the number of outputs to the next layer. This is the default.

- 'ave': the average of the outputs is taken.
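The effect of each merge mode on the output values and shape can be illustrated with two small arrays standing in for the forward and backward outputs. This is a NumPy sketch of the arithmetic only; `merge_outputs` is a hypothetical helper, not a Keras function.

```python
import numpy as np

def merge_outputs(forward, backward, mode="concat"):
    """Combine forward and backward outputs the way each merge mode describes."""
    if mode == "sum":
        return forward + backward
    if mode == "mul":
        return forward * backward
    if mode == "ave":
        return (forward + backward) / 2.0
    if mode == "concat":
        return np.concatenate([forward, backward], axis=-1)
    raise ValueError(f"unknown merge mode: {mode}")

# Two time steps, two units per direction (values chosen by hand).
forward = np.array([[1.0, 2.0], [3.0, 4.0]])
backward = np.array([[0.5, 0.5], [2.0, 1.0]])

for mode in ("sum", "mul", "ave", "concat"):
    merged = merge_outputs(forward, backward, mode)
    print(mode, merged.shape)
```

Only 'concat' changes the width of the output (here from 2 to 4); the other three modes keep the width of a single direction.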

The problem is defined as a sequence of random values between 0 and 1.

This sequence is taken as input for the problem, with one number per time step.

A binary label (0 or 1) is associated with each input. The output values all start at 0.

Once the cumulative sum of the input values exceeds a threshold of one quarter of the sequence length, the output value switches to 1.

Keras Bidirectional LSTM Example:-

First, create an input sequence of random numbers in the range [0, 1]:

    X = array([random() for _ in range(10)])

    [0.22228819 0.26882207 0.069623 0.91477783 0.02095862 0.71322527
     0.90159654 0.65000306 0.88845226 0.4037031]

The threshold is one quarter of the sequence length:

    limit = 10/4.0

Output:-

    0 0 0 0 0 0 1 1 1 1

The cumulative sum of this sequence first exceeds the threshold of 2.5 at the seventh value, so the output switches to 1 from that time step on.

The cumulative sum can be calculated using NumPy's built-in cumsum() function.

The get_sequence() function takes the length of the sequence as input and returns the X and y components of a new problem instance.

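    from random import random
    from numpy import array
    from numpy import cumsum

    # create a sequence classification instance
    def get_sequence(n_timesteps):
        # create a sequence of random numbers in [0, 1]
        X = array([random() for _ in range(n_timesteps)])
        # calculate the cut-off value to change the class
        limit = n_timesteps/4.0
        # determine the class outcome for each item in the cumulative sequence
        y = array([0 if x < limit else 1 for x in cumsum(X)])
        return X, y

    X, y = get_sequence(10)
    print(X)
    print(y)

An example run prints:

    [0.22228819 0.26882207 0.069623 0.91477783 0.02095862 0.71322527
     0.90159654 0.65000306 0.88845226 0.4037031]
    [0 0 0 0 0 0 1 1 1 1]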

Bidirectional LSTM Keras Classification:-

The outputs of the forward and backward LSTMs are concatenated.

As a result, the TimeDistributed layer receives 10 time steps of 40 outputs (20 from each direction) instead of 10 time steps of 20 outputs.

    from random import random
    from numpy import array
    from numpy import cumsum
    from keras.models import Sequential
    from keras.layers import LSTM
    from keras.layers import Dense
    from keras.layers import TimeDistributed
    from keras.layers import Bidirectional

    # create a sequence classification instance
    def get_sequence(n_timesteps):
        # create a sequence of random numbers in [0, 1]
        X = array([random() for _ in range(n_timesteps)])
        # calculate the cut-off value to change the class
        limit = n_timesteps/4.0
        # determine the class outcome for each item in the cumulative sequence
        outcome = [0 if x < limit else 1 for x in cumsum(X)]
        y = array(outcome)
        # reshape input and output data to [samples, time steps, features]
        X = X.reshape(1, n_timesteps, 1)
        y = y.reshape(1, n_timesteps, 1)
        return X, y

    n_timesteps = 10
    # define the bidirectional LSTM
    model = Sequential()
    model.add(Bidirectional(LSTM(20, return_sequences=True), input_shape=(n_timesteps, 1)))
    model.add(TimeDistributed(Dense(1, activation='sigmoid')))
    model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['acc'])
    # train on a new random sequence in every epoch
    for epoch in range(1000):
        X, y = get_sequence(n_timesteps)
        model.fit(X, y, epochs=1, batch_size=1, verbose=2)
    # evaluate on a new random sequence
    X, y = get_sequence(n_timesteps)
    # predict_classes() is not available in recent Keras releases,
    # so threshold the sigmoid output at 0.5 instead
    yhat = (model.predict(X, verbose=0) > 0.5).astype('int32')
    for i in range(n_timesteps):
        print('Expected:', y[0, i], 'Predicted', yhat[0, i])

    Epoch 1/1
    0s - loss: 0.0967 - acc: 0.9000
    Epoch 1/1
    0s - loss: 0.0865 - acc: 1.0000
    Epoch 1/1
    0s - loss: 0.0905 - acc: 0.9000
    Epoch 1/1
    0s - loss: 0.2460 - acc: 0.9000
    Epoch 1/1
    0s - loss: 0.1458 - acc: 0.9000
    Expected: [0] Predicted [0]
    Expected: [0] Predicted [0]
    Expected: [0] Predicted [0]
    Expected: [0] Predicted [0]
    Expected: [0] Predicted [0]
    Expected: [1] Predicted [1]
    Expected: [1] Predicted [1]
    Expected: [1] Predicted [1]
    Expected: [1] Predicted [1]
    Expected: [1] Predicted [1]

Here we take two LSTMs and run them in opposite directions.

In one, information flows from left to right; in the other, it flows from right to left.

The output of the last node of the LSTM running left to right and the output of the first node of the LSTM running right to left are then concatenated.

This is easy to do in Keras: just add the Bidirectional wrapper.

Arguments:-

- layer:- The recurrent layer instance to wrap, such as an LSTM.

- merge_mode:- The mode by which the outputs of the forward and backward RNNs are combined.

- backward_layer:- An optional recurrent layer instance used to handle backward input processing. If it is not provided, the layer passed as layer is used to generate the backward layer.

- Call arguments:- The call arguments are the same as those of the wrapped RNN layer. An error is raised if layer or backward_layer is not a layer instance.

Cumulative Sum Prediction Problem:-

The cumulative sum prediction problem is a simple sequence classification problem that we can use to explore bidirectional LSTMs.

It is divided into the following parts:

- Cumulative Sum.

- Sequence Generation.

- Generate Multiple Sequences.

1. Cumulative Sum:-

The problem is defined as a sequence of random values between 0 and 1.

The sequence is taken as an input for the problem with each number provided once per time step.

A binary label (0 or 1) is associated with each input. The output values all start at 0.

Once the cumulative sum of the input values in the sequence exceeds a threshold, the output value flips from 0 to 1.

A threshold of one quarter (1/4) of the sequence length is used.
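As a worked instance of this rule, the labels for the 10-value example sequence shown earlier can be computed using only the standard library. The function name `label_sequence` is a hypothetical helper introduced here for illustration.

```python
from itertools import accumulate

def label_sequence(values):
    """Label each step 0 until the running sum reaches one quarter of the length, then 1."""
    limit = len(values) / 4.0
    return [0 if total < limit else 1 for total in accumulate(values)]

values = [0.22228819, 0.26882207, 0.069623, 0.91477783, 0.02095862,
          0.71322527, 0.90159654, 0.65000306, 0.88845226, 0.4037031]

# The running sum is about 2.21 after six values and about 3.11 after seven,
# so it first passes the limit of 2.5 at the seventh time step.
print(label_sequence(values))  # [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
```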

We will frame the problem to make the best use of the Bidirectional LSTM architecture.

The output sequence is produced after the entire input sequence has been fed into the model.

2. Sequence Generation:-

The first step is to generate a sequence of random values.

We can use the random() function from the random module.

For example, a sequence of 10 random numbers in [0, 1] can be created as follows:

    X = array([random() for _ in range(10)])

The threshold is calculated as one quarter of the length of the input sequence.

3. Generate Multiple Sequences:-

We define a function to create multiple sequences.

The function, named get_sequences(), takes the number of sequences to generate and the number of time steps per sequence as arguments.

It calls get_sequence() to generate each sequence.

Once the specified number of sequences has been generated, the lists of input and output sequences are reshaped to be three-dimensional.
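One possible way to write such a function is sketched below. It assumes a get_sequence() that returns flat one-dimensional arrays (the reshape happens once at the end), and the variable names `seqX` and `seqY` are illustrative choices.

```python
from random import random
from numpy import array, cumsum

def get_sequence(n_timesteps):
    """Create one random input sequence and its cumulative-sum labels (1-D arrays)."""
    X = array([random() for _ in range(n_timesteps)])
    limit = n_timesteps / 4.0
    y = array([0 if x < limit else 1 for x in cumsum(X)])
    return X, y

def get_sequences(n_sequences, n_timesteps):
    """Create several sequences and reshape them to [samples, time steps, features]."""
    seqX, seqY = [], []
    for _ in range(n_sequences):
        X, y = get_sequence(n_timesteps)
        seqX.append(X)
        seqY.append(y)
    seqX = array(seqX).reshape(n_sequences, n_timesteps, 1)
    seqY = array(seqY).reshape(n_sequences, n_timesteps, 1)
    return seqX, seqY

X, y = get_sequences(5, 10)
print(X.shape, y.shape)  # (5, 10, 1) (5, 10, 1)
```

Generating several sequences at once makes it possible to train on a batch per call instead of one sequence per epoch.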